In the rapidly evolving landscape of artificial intelligence, OpenAI’s customizable ChatGPT models, known as GPTs, have brought to light a critical and often overlooked aspect of our digital era: data security and literacy. The revelation that these systems can leak sensitive information is a stark reminder of the tension between innovation and privacy. These leaks, while concerning, also offer a valuable learning opportunity, highlighting the need for enhanced digital literacy in an age where data has become the new currency.

The digital world as we know it today is an intricate web of data collection and analysis. Industry giants such as Amazon and Google have mastered the art of leveraging user data not only to enhance user experience but also to drive their business strategies. They hold vast repositories of information, detailing everything from our shopping preferences to our online search patterns. This data-centric approach, while beneficial in many respects, raises significant questions about privacy and the control we have over our personal information.

OpenAI’s GPTs, designed to be customized for a variety of tasks, from academic research assistance to playful character transformations, have pulled back the curtain on this world of data. Users, through simple interactions, have managed to extract the foundational instructions and data sets behind these custom models. This unexpected transparency exposes a fundamental truth about the digital age: when we feed our data into any platform, we are often unknowingly contributing to a larger ecosystem where our information is analyzed, processed, and, in some cases, monetized by entities far removed from our control.

As unsettling as these security leaks may be, they serve a crucial function: they are raising public awareness of how data is exchanged and used on digital platforms. This article explores how the security vulnerabilities in OpenAI’s GPTs are fostering a much-needed increase in digital literacy, exposing the layers of data usage and ownership in the digital domain and revealing the complex interplay of data brokering in the modern tech industry.

Imagine you are an academic researcher or a market analyst who decides to leverage the power of AI to enhance your work. You create a custom GPT, uploading a wealth of data – perhaps years of meticulous research or in-depth market analyses. This data is your intellectual property, the cornerstone of your professional expertise. To make this AI tool more interactive and user-friendly, you set parameters that allow users to query your GPT for specific insights or summaries. A savvy or curious user, interacting with your GPT, employs a series of cleverly crafted prompts, effectively “coaxing” the AI into revealing more than just processed insights. This user manages to extract the entire raw dataset you uploaded – the very foundation of your custom GPT. Suddenly, what was intended to be a controlled interaction morphs into a significant data leak, exposing your proprietary information.

This scenario is akin to a shopper having unrestricted access to Amazon’s extensive sales database while browsing the site. Just as this hypothetical access would provide the shopper with an unprecedented view of Amazon’s operations, market strategies, and consumer behaviors, the leakage from your custom GPT offers a similar level of transparency into your professional endeavors. Such incidents not only raise serious concerns about intellectual property rights and data privacy but also underline the necessity for robust security measures and a deeper understanding of the digital tools we increasingly rely on.

This example underscores the importance of approaching the digital realm with a heightened sense of awareness and caution, especially as AI and machine learning technologies continue to integrate into various aspects of our personal and professional lives. It highlights the dual responsibility of AI developers and users in safeguarding data and emphasizes the urgent need for enhanced digital literacy in navigating these complex and often opaque digital landscapes.
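For readers who build their own tools, the developer side of that shared responsibility can be made concrete. What follows is a minimal sketch, not a feature of OpenAI’s GPT builder: it assumes you serve a model from your own application, where you can inspect each response before it reaches the user. The function names (`shingles`, `leak_ratio`, `guard`), the eight-word window, and the 0.5 threshold are all hypothetical choices made for illustration. The idea is simply to measure how much of a response is copied word for word from the protected files and to withhold it when the overlap is high.

```python
from typing import List, Set, Tuple


def shingles(text: str, size: int = 8) -> Set[Tuple[str, ...]]:
    """Return every overlapping run of `size` consecutive words in `text`."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def leak_ratio(response: str, protected_docs: List[str], size: int = 8) -> float:
    """Fraction of the response's word runs that appear verbatim in a protected document."""
    response_runs = shingles(response, size)
    if not response_runs:
        # Responses shorter than `size` words have no runs to compare.
        return 0.0
    protected_runs: Set[Tuple[str, ...]] = set()
    for doc in protected_docs:
        protected_runs |= shingles(doc, size)
    return len(response_runs & protected_runs) / len(response_runs)


def guard(response: str, protected_docs: List[str], threshold: float = 0.5) -> str:
    """Replace a response with a refusal if it mostly reproduces protected text."""
    # The threshold is an illustrative assumption, not a recommended value.
    if leak_ratio(response, protected_docs) >= threshold:
        return "I can summarize this material, but I can't share the source files verbatim."
    return response
```

A check like this only catches verbatim copying. A paraphrased leak, or one delivered a few words at a time, would pass straight through, which is one more reason the safest course is to avoid uploading anything you could not afford to see exposed.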

Training the Public How to Trust AI

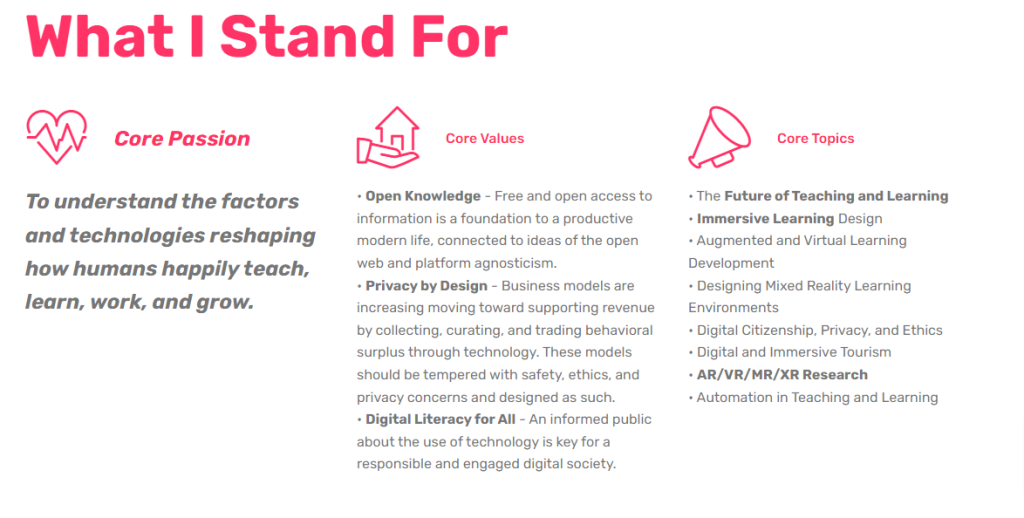

As a learning futurist deeply invested in the intersection of technology, education, and society, I find the recent security leaks in OpenAI’s GPTs to be another teachable moment in our journey towards digital literacy and awareness. These incidents, while concerning, are invaluable in demonstrating the complexity and vulnerability of our digital ecosystem. They align closely with my ethos on open knowledge, privacy by design, and the essentiality of digital literacy for all.

In my advocacy for open and free access to information, I’ve always stressed the importance of transparency, particularly in AI and data management. The GPT leaks are a stark reminder of the intricacies involved in how data is handled, used, and potentially exposed. This transparency is not just a necessity but a right, enabling us to navigate digital platforms more securely and knowledgeably.

The principle of ‘Privacy by Design’ is more crucial now than ever. In an era where businesses thrive on the collection and analysis of behavioral data, these leaks illustrate the dire consequences of neglecting privacy, ethics, and safety in technological design. It’s a call to action for all of us in the tech community to embed these principles deeply into our creations.

As I delve into the realms of immersive learning design and augmented/virtual learning development, these security vulnerabilities serve as a real-world case study. They offer insights into the risks and ethical considerations crucial in developing digital and immersive learning environments. With the growing integration of advanced technologies like AR, VR, and MR in educational settings, understanding and addressing these security challenges is non-negotiable.

These incidents resonate with my focus on nurturing digital citizenship, privacy, and ethics. They underscore the need for ongoing education and adaptation in response to the rapidly evolving tech landscape and its implications for privacy and data security. The GPT leaks, in an unexpected way, contribute to our collective mission of fostering a more informed, vigilant, and responsible digital community. As we transition into new eras of learning and technology integration, let these incidents be a lesson and a guidepost, reminding us of the importance of vigilance and education in our digital interactions.

As we integrate AI more deeply into our lives, it’s crucial to be aware of the data we share and how it might be used or potentially exposed. Emphasizing digital literacy is key to fostering a safer, more responsible internet environment. This awareness not only helps protect personal information but also guides us in understanding the implications of our interactions with AI and other digital platforms. Being digitally literate empowers users to navigate the online world more safely and make informed decisions about their digital footprint. This consciousness, shared by a well-informed populace, contributes to a safer and more secure internet for everyone.